dbt

There are 2 sources that provide integration with dbt

| Source Module | Documentation |

| |

|

Ingesting metadata from dbt requires either using the dbt module or the dbt-cloud module.

Concept Mapping

| Source Concept | DataHub Concept | Notes |

|---|---|---|

| Source | Dataset | Subtype Source |

| Seed | Dataset | Subtype Seed |

| Model | Dataset | Subtype Model |

| Snapshot | Dataset | Subtype Snapshot |

| Test | Assertion | |

| Test Result | Assertion Run Result | |

| Model Runs | DataProcessInstance |

Note:

- You must run ingestion for both dbt and your data warehouse (target platform). They can be run in any order.

- It generates column lineage between the

dbtnodes (e.g. when a model/snapshot depends on a dbt source or ephemeral model) as well as lineage between thedbtnodes and the underlying target platform nodes (e.g. BigQuery Table -> dbt source, dbt model -> BigQuery table/view). - It automatically generates "sibling" relationships between the dbt nodes and the target / data warehouse nodes. These nodes will show up in the UI with both platform logos.

- We also support automated actions (like add a tag, term or owner) based on properties defined in dbt meta.

Module dbt

Important Capabilities

| Capability | Status | Notes |

|---|---|---|

| Column-level Lineage | ✅ | Enabled by default, configure using include_column_lineage |

| Detect Deleted Entities | ✅ | Enabled via stateful ingestion |

| Table-Level Lineage | ✅ | Enabled by default |

Setup

The artifacts used by this source are:

- dbt manifest file

- This file contains model, source, tests and lineage data.

- dbt catalog file

- This file contains schema data.

- dbt does not record schema data for Ephemeral models, as such datahub will show Ephemeral models in the lineage, however there will be no associated schema for Ephemeral models

- dbt sources file

- This file contains metadata for sources with freshness checks.

- We transfer dbt's freshness checks to DataHub's last-modified fields.

- Note that this file is optional – if not specified, we'll use time of ingestion instead as a proxy for time last-modified.

- dbt run_results file

- This file contains metadata from the result of a dbt run, e.g. dbt test

- When provided, we transfer dbt test run results into assertion run events to see a timeline of test runs on the dataset

To generate these files, we recommend this workflow for dbt build and datahub ingestion.

dbt source snapshot-freshness

dbt build

cp target/run_results.json target/run_results_backup.json

dbt docs generate

cp target/run_results_backup.json target/run_results.json

# Run datahub ingestion, pointing at the files in the target/ directory

The necessary artifact files will then appear in the target/ directory of your dbt project.

We also have guides on handling more complex dbt orchestration techniques and multi-project setups below.

This warning usually appears when the catalog.json file was not generated by a dbt docs generate command.

Most other dbt commands generate a partial catalog file, which may impact the completeness of the metadata in ingested into DataHub.

Following the above workflow should ensure that the catalog file is generated correctly.

CLI based Ingestion

Install the Plugin

The dbt source works out of the box with acryl-datahub.

Starter Recipe

Check out the following recipe to get started with ingestion! See below for full configuration options.

For general pointers on writing and running a recipe, see our main recipe guide.

source:

type: "dbt"

config:

# Coordinates

# To use this as-is, set the environment variable DBT_PROJECT_ROOT to the root folder of your dbt project

manifest_path: "${DBT_PROJECT_ROOT}/target/manifest_file.json"

catalog_path: "${DBT_PROJECT_ROOT}/target/catalog_file.json"

sources_path: "${DBT_PROJECT_ROOT}/target/sources_file.json" # optional for freshness

run_results_paths:

- "${DBT_PROJECT_ROOT}/target/run_results.json" # optional for recording dbt test results after running dbt test

# Options

target_platform: "my_target_platform_id" # e.g. bigquery/postgres/etc.

# sink configs

Config Details

- Options

- Schema

Note that a . is used to denote nested fields in the YAML recipe.

| Field | Description |

|---|---|

catalog_path ✅ string | Path to dbt catalog JSON. See https://docs.getdbt.com/reference/artifacts/catalog-json Note this can be a local file or a URI. |

manifest_path ✅ string | Path to dbt manifest JSON. See https://docs.getdbt.com/reference/artifacts/manifest-json Note this can be a local file or a URI. |

target_platform ✅ string | The platform that dbt is loading onto. (e.g. bigquery / redshift / postgres etc.) |

column_meta_mapping object | mapping rules that will be executed against dbt column meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

convert_column_urns_to_lowercase boolean | When enabled, converts column URNs to lowercase to ensure cross-platform compatibility. If target_platform is Snowflake, the default is True. Default: False |

enable_meta_mapping boolean | When enabled, applies the mappings that are defined through the meta_mapping directives. Default: True |

enable_owner_extraction boolean | When enabled, ownership info will be extracted from the dbt meta Default: True |

enable_query_tag_mapping boolean | When enabled, applies the mappings that are defined through the query_tag_mapping directives. Default: True |

include_column_lineage boolean | When enabled, column-level lineage will be extracted from the dbt node definition. Requires infer_dbt_schemas to be enabled. If you run into issues where the column name casing does not match up with properly, providing a datahub_api or using the rest sink will improve accuracy. Default: True |

include_compiled_code boolean | When enabled, includes the compiled code in the emitted metadata. Default: True |

include_env_in_assertion_guid boolean | Prior to version 0.9.4.2, the assertion GUIDs did not include the environment. If you're using multiple dbt ingestion that are only distinguished by env, then you should set this flag to True. Default: False |

incremental_lineage boolean | When enabled, emits incremental/patch lineage for non-dbt entities. When disabled, re-states lineage on each run. Default: True |

infer_dbt_schemas boolean | When enabled, schemas will be inferred from the dbt node definition. Default: True |

meta_mapping object | mapping rules that will be executed against dbt meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

only_include_if_in_catalog boolean | [experimental] If true, only include nodes that are also present in the catalog file. This is useful if you only want to include models that have been built by the associated run. Default: False |

owner_extraction_pattern string | Regex string to extract owner from the dbt node using the (?P<name>...) syntax of the match object, where the group name must be owner. Examples: (1)r"(?P<owner>(.*)): (\w+) (\w+)" will extract jdoe as the owner from "jdoe: John Doe" (2) r"@(?P<owner>(.*))" will extract alice as the owner from "@alice". |

platform_instance string | The instance of the platform that all assets produced by this recipe belong to. This should be unique within the platform. See https://datahubproject.io/docs/platform-instances/ for more details. |

prefer_sql_parser_lineage boolean | Normally we use dbt's metadata to generate table lineage. When enabled, we prefer results from the SQL parser when generating lineage instead. This can be useful when dbt models reference tables directly, instead of using the ref() macro. This requires that skip_sources_in_lineage is enabled. Default: False |

query_tag_mapping object | mapping rules that will be executed against dbt query_tag meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

skip_sources_in_lineage boolean | [Experimental] When enabled, dbt sources will not be included in the lineage graph. Requires that entities_enabled.sources is set to NO. This is mainly useful when you have multiple, interdependent dbt projects. Default: False |

sources_path string | Path to dbt sources JSON. See https://docs.getdbt.com/reference/artifacts/sources-json. If not specified, last-modified fields will not be populated. Note this can be a local file or a URI. |

strip_user_ids_from_email boolean | Whether or not to strip email id while adding owners using dbt meta actions. Default: False |

tag_prefix string | Prefix added to tags during ingestion. Default: dbt: |

target_platform_instance string | The platform instance for the platform that dbt is operating on. Use this if you have multiple instances of the same platform (e.g. redshift) and need to distinguish between them. |

test_warnings_are_errors boolean | When enabled, dbt test warnings will be treated as failures. Default: False |

use_identifiers boolean | Use model identifier instead of model name if defined (if not, default to model name). Default: False |

write_semantics string | Whether the new tags, terms and owners to be added will override the existing ones added only by this source or not. Value for this config can be "PATCH" or "OVERRIDE" Default: PATCH |

env string | Environment to use in namespace when constructing URNs. Default: PROD |

aws_connection AwsConnectionConfig | When fetching manifest files from s3, configuration for aws connection details |

aws_connection.aws_access_key_id string | AWS access key ID. Can be auto-detected, see the AWS boto3 docs for details. |

aws_connection.aws_advanced_config object | Advanced AWS configuration options. These are passed directly to botocore.config.Config. |

aws_connection.aws_endpoint_url string | The AWS service endpoint. This is normally constructed automatically, but can be overridden here. |

aws_connection.aws_profile string | The named profile to use from AWS credentials. Falls back to default profile if not specified and no access keys provided. Profiles are configured in ~/.aws/credentials or ~/.aws/config. |

aws_connection.aws_proxy map(str,string) | |

aws_connection.aws_region string | AWS region code. |

aws_connection.aws_retry_mode Enum | One of: "legacy", "standard", "adaptive" Default: standard |

aws_connection.aws_retry_num integer | Number of times to retry failed AWS requests. See the botocore.retry docs for details. Default: 5 |

aws_connection.aws_secret_access_key string | AWS secret access key. Can be auto-detected, see the AWS boto3 docs for details. |

aws_connection.aws_session_token string | AWS session token. Can be auto-detected, see the AWS boto3 docs for details. |

aws_connection.read_timeout number | The timeout for reading from the connection (in seconds). Default: 60 |

aws_connection.aws_role One of string, array | AWS roles to assume. If using the string format, the role ARN can be specified directly. If using the object format, the role can be specified in the RoleArn field and additional available arguments are the same as boto3's STS.Client.assume_role. |

aws_connection.aws_role.union One of string, AwsAssumeRoleConfig | |

aws_connection.aws_role.union.RoleArn ❓ string | ARN of the role to assume. |

aws_connection.aws_role.union.ExternalId string | External ID to use when assuming the role. |

entities_enabled DBTEntitiesEnabled | Controls for enabling / disabling metadata emission for different dbt entities (models, test definitions, test results, etc.) Default: {'models': 'YES', 'sources': 'YES', 'seeds': 'YES'... |

entities_enabled.model_performance Enum | Emit model performance metadata when set to Yes or Only. Only supported with dbt core. Default: YES |

entities_enabled.models Enum | Emit metadata for dbt models when set to Yes or Only Default: YES |

entities_enabled.seeds Enum | Emit metadata for dbt seeds when set to Yes or Only Default: YES |

entities_enabled.snapshots Enum | Emit metadata for dbt snapshots when set to Yes or Only Default: YES |

entities_enabled.sources Enum | Emit metadata for dbt sources when set to Yes or Only Default: YES |

entities_enabled.test_definitions Enum | Emit metadata for test definitions when enabled when set to Yes or Only Default: YES |

entities_enabled.test_results Enum | Emit metadata for test results when set to Yes or Only Default: YES |

git_info GitReference | Reference to your git location to enable easy navigation from DataHub to your dbt files. |

git_info.repo ❓ string | Name of your Git repo e.g. https://github.com/datahub-project/datahub or https://gitlab.com/gitlab-org/gitlab. If organization/repo is provided, we assume it is a GitHub repo. |

git_info.branch string | Branch on which your files live by default. Typically main or master. This can also be a commit hash. Default: main |

git_info.url_subdir string | Prefix to prepend when generating URLs for files - useful when files are in a subdirectory. Only affects URL generation, not git operations. |

git_info.url_template string | Template for generating a URL to a file in the repo e.g. '{repo_url}/blob/{branch}/{file_path}'. We can infer this for GitHub and GitLab repos, and it is otherwise required.It supports the following variables: {repo_url}, {branch}, {file_path} |

node_name_pattern AllowDenyPattern | regex patterns for dbt model names to filter in ingestion. Default: {'allow': ['.*'], 'deny': [], 'ignoreCase': True} |

node_name_pattern.ignoreCase boolean | Whether to ignore case sensitivity during pattern matching. Default: True |

node_name_pattern.allow array | List of regex patterns to include in ingestion Default: ['.*'] |

node_name_pattern.allow.string string | |

node_name_pattern.deny array | List of regex patterns to exclude from ingestion. Default: [] |

node_name_pattern.deny.string string | |

run_results_paths array | Path to output of dbt test run as run_results files in JSON format. If not specified, test execution results and model performance metadata will not be populated in DataHub.If invoking dbt multiple times, you can provide paths to multiple run result files. See https://docs.getdbt.com/reference/artifacts/run-results-json. Default: [] |

run_results_paths.string string | |

stateful_ingestion StatefulStaleMetadataRemovalConfig | DBT Stateful Ingestion Config. |

stateful_ingestion.enabled boolean | Whether or not to enable stateful ingest. Default: True if a pipeline_name is set and either a datahub-rest sink or datahub_api is specified, otherwise False Default: False |

stateful_ingestion.remove_stale_metadata boolean | Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled. Default: True |

The JSONSchema for this configuration is inlined below.

{

"title": "DBTCoreConfig",

"description": "Base configuration class for stateful ingestion for source configs to inherit from.",

"type": "object",

"properties": {

"incremental_lineage": {

"title": "Incremental Lineage",

"description": "When enabled, emits incremental/patch lineage for non-dbt entities. When disabled, re-states lineage on each run.",

"default": true,

"type": "boolean"

},

"env": {

"title": "Env",

"description": "Environment to use in namespace when constructing URNs.",

"default": "PROD",

"type": "string"

},

"platform_instance": {

"title": "Platform Instance",

"description": "The instance of the platform that all assets produced by this recipe belong to. This should be unique within the platform. See https://datahubproject.io/docs/platform-instances/ for more details.",

"type": "string"

},

"stateful_ingestion": {

"title": "Stateful Ingestion",

"description": "DBT Stateful Ingestion Config.",

"allOf": [

{

"$ref": "#/definitions/StatefulStaleMetadataRemovalConfig"

}

]

},

"target_platform": {

"title": "Target Platform",

"description": "The platform that dbt is loading onto. (e.g. bigquery / redshift / postgres etc.)",

"type": "string"

},

"target_platform_instance": {

"title": "Target Platform Instance",

"description": "The platform instance for the platform that dbt is operating on. Use this if you have multiple instances of the same platform (e.g. redshift) and need to distinguish between them.",

"type": "string"

},

"use_identifiers": {

"title": "Use Identifiers",

"description": "Use model identifier instead of model name if defined (if not, default to model name).",

"default": false,

"type": "boolean"

},

"entities_enabled": {

"title": "Entities Enabled",

"description": "Controls for enabling / disabling metadata emission for different dbt entities (models, test definitions, test results, etc.)",

"default": {

"models": "YES",

"sources": "YES",

"seeds": "YES",

"snapshots": "YES",

"test_definitions": "YES",

"test_results": "YES",

"model_performance": "YES"

},

"allOf": [

{

"$ref": "#/definitions/DBTEntitiesEnabled"

}

]

},

"prefer_sql_parser_lineage": {

"title": "Prefer Sql Parser Lineage",

"description": "Normally we use dbt's metadata to generate table lineage. When enabled, we prefer results from the SQL parser when generating lineage instead. This can be useful when dbt models reference tables directly, instead of using the ref() macro. This requires that `skip_sources_in_lineage` is enabled.",

"default": false,

"type": "boolean"

},

"skip_sources_in_lineage": {

"title": "Skip Sources In Lineage",

"description": "[Experimental] When enabled, dbt sources will not be included in the lineage graph. Requires that `entities_enabled.sources` is set to `NO`. This is mainly useful when you have multiple, interdependent dbt projects. ",

"default": false,

"type": "boolean"

},

"tag_prefix": {

"title": "Tag Prefix",

"description": "Prefix added to tags during ingestion.",

"default": "dbt:",

"type": "string"

},

"node_name_pattern": {

"title": "Node Name Pattern",

"description": "regex patterns for dbt model names to filter in ingestion.",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"meta_mapping": {

"title": "Meta Mapping",

"description": "mapping rules that will be executed against dbt meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"column_meta_mapping": {

"title": "Column Meta Mapping",

"description": "mapping rules that will be executed against dbt column meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"enable_meta_mapping": {

"title": "Enable Meta Mapping",

"description": "When enabled, applies the mappings that are defined through the meta_mapping directives.",

"default": true,

"type": "boolean"

},

"query_tag_mapping": {

"title": "Query Tag Mapping",

"description": "mapping rules that will be executed against dbt query_tag meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"enable_query_tag_mapping": {

"title": "Enable Query Tag Mapping",

"description": "When enabled, applies the mappings that are defined through the `query_tag_mapping` directives.",

"default": true,

"type": "boolean"

},

"write_semantics": {

"title": "Write Semantics",

"description": "Whether the new tags, terms and owners to be added will override the existing ones added only by this source or not. Value for this config can be \"PATCH\" or \"OVERRIDE\"",

"default": "PATCH",

"type": "string"

},

"strip_user_ids_from_email": {

"title": "Strip User Ids From Email",

"description": "Whether or not to strip email id while adding owners using dbt meta actions.",

"default": false,

"type": "boolean"

},

"enable_owner_extraction": {

"title": "Enable Owner Extraction",

"description": "When enabled, ownership info will be extracted from the dbt meta",

"default": true,

"type": "boolean"

},

"owner_extraction_pattern": {

"title": "Owner Extraction Pattern",

"description": "Regex string to extract owner from the dbt node using the `(?P<name>...) syntax` of the [match object](https://docs.python.org/3/library/re.html#match-objects), where the group name must be `owner`. Examples: (1)`r\"(?P<owner>(.*)): (\\w+) (\\w+)\"` will extract `jdoe` as the owner from `\"jdoe: John Doe\"` (2) `r\"@(?P<owner>(.*))\"` will extract `alice` as the owner from `\"@alice\"`.",

"type": "string"

},

"include_env_in_assertion_guid": {

"title": "Include Env In Assertion Guid",

"description": "Prior to version 0.9.4.2, the assertion GUIDs did not include the environment. If you're using multiple dbt ingestion that are only distinguished by env, then you should set this flag to True.",

"default": false,

"type": "boolean"

},

"convert_column_urns_to_lowercase": {

"title": "Convert Column Urns To Lowercase",

"description": "When enabled, converts column URNs to lowercase to ensure cross-platform compatibility. If `target_platform` is Snowflake, the default is True.",

"default": false,

"type": "boolean"

},

"test_warnings_are_errors": {

"title": "Test Warnings Are Errors",

"description": "When enabled, dbt test warnings will be treated as failures.",

"default": false,

"type": "boolean"

},

"infer_dbt_schemas": {

"title": "Infer Dbt Schemas",

"description": "When enabled, schemas will be inferred from the dbt node definition.",

"default": true,

"type": "boolean"

},

"include_column_lineage": {

"title": "Include Column Lineage",

"description": "When enabled, column-level lineage will be extracted from the dbt node definition. Requires `infer_dbt_schemas` to be enabled. If you run into issues where the column name casing does not match up with properly, providing a datahub_api or using the rest sink will improve accuracy.",

"default": true,

"type": "boolean"

},

"include_compiled_code": {

"title": "Include Compiled Code",

"description": "When enabled, includes the compiled code in the emitted metadata.",

"default": true,

"type": "boolean"

},

"manifest_path": {

"title": "Manifest Path",

"description": "Path to dbt manifest JSON. See https://docs.getdbt.com/reference/artifacts/manifest-json Note this can be a local file or a URI.",

"type": "string"

},

"catalog_path": {

"title": "Catalog Path",

"description": "Path to dbt catalog JSON. See https://docs.getdbt.com/reference/artifacts/catalog-json Note this can be a local file or a URI.",

"type": "string"

},

"sources_path": {

"title": "Sources Path",

"description": "Path to dbt sources JSON. See https://docs.getdbt.com/reference/artifacts/sources-json. If not specified, last-modified fields will not be populated. Note this can be a local file or a URI.",

"type": "string"

},

"run_results_paths": {

"title": "Run Results Paths",

"description": "Path to output of dbt test run as run_results files in JSON format. If not specified, test execution results and model performance metadata will not be populated in DataHub.If invoking dbt multiple times, you can provide paths to multiple run result files. See https://docs.getdbt.com/reference/artifacts/run-results-json.",

"default": [],

"type": "array",

"items": {

"type": "string"

}

},

"only_include_if_in_catalog": {

"title": "Only Include If In Catalog",

"description": "[experimental] If true, only include nodes that are also present in the catalog file. This is useful if you only want to include models that have been built by the associated run.",

"default": false,

"type": "boolean"

},

"aws_connection": {

"title": "Aws Connection",

"description": "When fetching manifest files from s3, configuration for aws connection details",

"allOf": [

{

"$ref": "#/definitions/AwsConnectionConfig"

}

]

},

"git_info": {

"title": "Git Info",

"description": "Reference to your git location to enable easy navigation from DataHub to your dbt files.",

"allOf": [

{

"$ref": "#/definitions/GitReference"

}

]

}

},

"required": [

"target_platform",

"manifest_path",

"catalog_path"

],

"additionalProperties": false,

"definitions": {

"DynamicTypedStateProviderConfig": {

"title": "DynamicTypedStateProviderConfig",

"type": "object",

"properties": {

"type": {

"title": "Type",

"description": "The type of the state provider to use. For DataHub use `datahub`",

"type": "string"

},

"config": {

"title": "Config",

"description": "The configuration required for initializing the state provider. Default: The datahub_api config if set at pipeline level. Otherwise, the default DatahubClientConfig. See the defaults (https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/src/datahub/ingestion/graph/client.py#L19).",

"default": {},

"type": "object"

}

},

"required": [

"type"

],

"additionalProperties": false

},

"StatefulStaleMetadataRemovalConfig": {

"title": "StatefulStaleMetadataRemovalConfig",

"description": "Base specialized config for Stateful Ingestion with stale metadata removal capability.",

"type": "object",

"properties": {

"enabled": {

"title": "Enabled",

"description": "Whether or not to enable stateful ingest. Default: True if a pipeline_name is set and either a datahub-rest sink or `datahub_api` is specified, otherwise False",

"default": false,

"type": "boolean"

},

"remove_stale_metadata": {

"title": "Remove Stale Metadata",

"description": "Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

},

"EmitDirective": {

"title": "EmitDirective",

"description": "A holder for directives for emission for specific types of entities",

"enum": [

"YES",

"NO",

"ONLY"

]

},

"DBTEntitiesEnabled": {

"title": "DBTEntitiesEnabled",

"description": "Controls which dbt entities are going to be emitted by this source",

"type": "object",

"properties": {

"models": {

"description": "Emit metadata for dbt models when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"sources": {

"description": "Emit metadata for dbt sources when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"seeds": {

"description": "Emit metadata for dbt seeds when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"snapshots": {

"description": "Emit metadata for dbt snapshots when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"test_definitions": {

"description": "Emit metadata for test definitions when enabled when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"test_results": {

"description": "Emit metadata for test results when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"model_performance": {

"description": "Emit model performance metadata when set to Yes or Only. Only supported with dbt core.",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

}

},

"additionalProperties": false

},

"AllowDenyPattern": {

"title": "AllowDenyPattern",

"description": "A class to store allow deny regexes",

"type": "object",

"properties": {

"allow": {

"title": "Allow",

"description": "List of regex patterns to include in ingestion",

"default": [

".*"

],

"type": "array",

"items": {

"type": "string"

}

},

"deny": {

"title": "Deny",

"description": "List of regex patterns to exclude from ingestion.",

"default": [],

"type": "array",

"items": {

"type": "string"

}

},

"ignoreCase": {

"title": "Ignorecase",

"description": "Whether to ignore case sensitivity during pattern matching.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

},

"AwsAssumeRoleConfig": {

"title": "AwsAssumeRoleConfig",

"type": "object",

"properties": {

"RoleArn": {

"title": "Rolearn",

"description": "ARN of the role to assume.",

"type": "string"

},

"ExternalId": {

"title": "Externalid",

"description": "External ID to use when assuming the role.",

"type": "string"

}

},

"required": [

"RoleArn"

]

},

"AwsConnectionConfig": {

"title": "AwsConnectionConfig",

"description": "Common AWS credentials config.\n\nCurrently used by:\n - Glue source\n - SageMaker source\n - dbt source",

"type": "object",

"properties": {

"aws_access_key_id": {

"title": "Aws Access Key Id",

"description": "AWS access key ID. Can be auto-detected, see [the AWS boto3 docs](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html) for details.",

"type": "string"

},

"aws_secret_access_key": {

"title": "Aws Secret Access Key",

"description": "AWS secret access key. Can be auto-detected, see [the AWS boto3 docs](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html) for details.",

"type": "string"

},

"aws_session_token": {

"title": "Aws Session Token",

"description": "AWS session token. Can be auto-detected, see [the AWS boto3 docs](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/credentials.html) for details.",

"type": "string"

},

"aws_role": {

"title": "Aws Role",

"description": "AWS roles to assume. If using the string format, the role ARN can be specified directly. If using the object format, the role can be specified in the RoleArn field and additional available arguments are the same as [boto3's STS.Client.assume_role](https://boto3.amazonaws.com/v1/documentation/api/latest/reference/services/sts.html?highlight=assume_role#STS.Client.assume_role).",

"anyOf": [

{

"type": "string"

},

{

"type": "array",

"items": {

"anyOf": [

{

"type": "string"

},

{

"$ref": "#/definitions/AwsAssumeRoleConfig"

}

]

}

}

]

},

"aws_profile": {

"title": "Aws Profile",

"description": "The [named profile](https://docs.aws.amazon.com/cli/latest/userguide/cli-configure-profiles.html) to use from AWS credentials. Falls back to default profile if not specified and no access keys provided. Profiles are configured in ~/.aws/credentials or ~/.aws/config.",

"type": "string"

},

"aws_region": {

"title": "Aws Region",

"description": "AWS region code.",

"type": "string"

},

"aws_endpoint_url": {

"title": "Aws Endpoint Url",

"description": "The AWS service endpoint. This is normally [constructed automatically](https://boto3.amazonaws.com/v1/documentation/api/latest/reference/core/session.html), but can be overridden here.",

"type": "string"

},

"aws_proxy": {

"title": "Aws Proxy",

"description": "A set of proxy configs to use with AWS. See the [botocore.config](https://botocore.amazonaws.com/v1/documentation/api/latest/reference/config.html) docs for details.",

"type": "object",

"additionalProperties": {

"type": "string"

}

},

"aws_retry_num": {

"title": "Aws Retry Num",

"description": "Number of times to retry failed AWS requests. See the [botocore.retry](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/retries.html) docs for details.",

"default": 5,

"type": "integer"

},

"aws_retry_mode": {

"title": "Aws Retry Mode",

"description": "Retry mode to use for failed AWS requests. See the [botocore.retry](https://boto3.amazonaws.com/v1/documentation/api/latest/guide/retries.html) docs for details.",

"default": "standard",

"enum": [

"legacy",

"standard",

"adaptive"

],

"type": "string"

},

"read_timeout": {

"title": "Read Timeout",

"description": "The timeout for reading from the connection (in seconds).",

"default": 60,

"type": "number"

},

"aws_advanced_config": {

"title": "Aws Advanced Config",

"description": "Advanced AWS configuration options. These are passed directly to [botocore.config.Config](https://botocore.amazonaws.com/v1/documentation/api/latest/reference/config.html).",

"type": "object"

}

},

"additionalProperties": false

},

"GitReference": {

"title": "GitReference",

"description": "Reference to a hosted Git repository. Used to generate \"view source\" links.",

"type": "object",

"properties": {

"repo": {

"title": "Repo",

"description": "Name of your Git repo e.g. https://github.com/datahub-project/datahub or https://gitlab.com/gitlab-org/gitlab. If organization/repo is provided, we assume it is a GitHub repo.",

"type": "string"

},

"branch": {

"title": "Branch",

"description": "Branch on which your files live by default. Typically main or master. This can also be a commit hash.",

"default": "main",

"type": "string"

},

"url_subdir": {

"title": "Url Subdir",

"description": "Prefix to prepend when generating URLs for files - useful when files are in a subdirectory. Only affects URL generation, not git operations.",

"type": "string"

},

"url_template": {

"title": "Url Template",

"description": "Template for generating a URL to a file in the repo e.g. '{repo_url}/blob/{branch}/{file_path}'. We can infer this for GitHub and GitLab repos, and it is otherwise required.It supports the following variables: {repo_url}, {branch}, {file_path}",

"type": "string"

}

},

"required": [

"repo"

],

"additionalProperties": false

}

}

}

dbt meta automated mappings

dbt allows authors to define meta properties for datasets. Checkout this link to know more - dbt meta. Our dbt source allows users to define

actions such as add a tag, term or owner. For example if a dbt model has a meta config "has_pii": True, we can define an action

that evaluates if the property is set to true and add, lets say, a pii tag.

To leverage this feature we require users to define mappings as part of the recipe. The following section describes how you can build these mappings. Listed below is a meta_mapping and column_meta_mapping section that among other things, looks for keys like business_owner and adds owners that are listed there.

meta_mapping:

business_owner:

match: ".*"

operation: "add_owner"

config:

owner_type: user

owner_category: BUSINESS_OWNER

has_pii:

match: True

operation: "add_tag"

config:

tag: "has_pii_test"

int_property:

match: 1

operation: "add_tag"

config:

tag: "int_meta_property"

double_property:

match: 2.5

operation: "add_term"

config:

term: "double_meta_property"

data_governance.team_owner:

match: "Finance"

operation: "add_term"

config:

term: "Finance_test"

terms_list:

match: ".*"

operation: "add_terms"

config:

separator: ","

documentation_link:

match: "(?:https?)?\:\/\/\w*[^#]*"

operation: "add_doc_link"

config:

link: {{ $match }}

description: "Documentation Link"

column_meta_mapping:

terms_list:

match: ".*"

operation: "add_terms"

config:

separator: ","

is_sensitive:

match: True

operation: "add_tag"

config:

tag: "sensitive"

We support the following operations:

add_tag - Requires

tagproperty in config.add_term - Requires

termproperty in config.add_terms - Accepts an optional

separatorproperty in config.add_owner - Requires

owner_typeproperty in config which can be eitheruserorgroup. Optionally accepts theowner_categoryconfig property which can be set to either a custom ownership type urn likeurn:li:ownershipType:architector one of['TECHNICAL_OWNER', 'BUSINESS_OWNER', 'DATA_STEWARD', 'DATAOWNER'(defaults toDATAOWNER).- The

owner_typeproperty will be ignored if the owner is a fully qualified urn. - You can use commas to specify multiple owners - e.g.

business_owner: "jane,john,urn:li:corpGroup:data-team".

- The

add_doc_link - Requires

linkanddescriptionproperties in config. Upon ingestion run, this will overwrite current links in the institutional knowledge section with this new link. The anchor text is defined here in the meta_mappings asdescription.

Note:

- The dbt

meta_mappingconfig works at the model level, while thecolumn_meta_mappingconfig works at the column level. Theadd_owneroperation is not supported at the column level. - For string meta properties we support regex matching.

With regex matching, you can also use the matched value to customize how you populate the tag, term or owner fields. Here are a few advanced examples:

Data Tier - Bronze, Silver, Gold

If your meta section looks like this:

meta:

data_tier: Bronze # chosen from [Bronze,Gold,Silver]

and you wanted to attach a glossary term like urn:li:glossaryTerm:Bronze for all the models that have this value in the meta section attached to them, the following meta_mapping section would achieve that outcome:

meta_mapping:

data_tier:

match: "Bronze|Silver|Gold"

operation: "add_term"

config:

term: "{{ $match }}"

to match any data_tier of Bronze, Silver or Gold and maps it to a glossary term with the same name.

Case Numbers - create tags

If your meta section looks like this:

meta:

case: PLT-4678 # internal Case Number

and you want to generate tags that look like case_4678 from this, you can use the following meta_mapping section:

meta_mapping:

case:

match: "PLT-(.*)"

operation: "add_tag"

config:

tag: "case_{{ $match }}"

Stripping out leading @ sign

You can also match specific groups within the value to extract subsets of the matched value. e.g. if you have a meta section that looks like this:

meta:

owner: "@finance-team"

business_owner: "@janet"

and you want to mark the finance-team as a group that owns the dataset (skipping the leading @ sign), while marking janet as an individual user (again, skipping the leading @ sign) that owns the dataset, you can use the following meta-mapping section.

meta_mapping:

owner:

match: "^@(.*)"

operation: "add_owner"

config:

owner_type: group

business_owner:

match: "^@(?P<owner>(.*))"

operation: "add_owner"

config:

owner_type: user

owner_category: BUSINESS_OWNER

In the examples above, we show two ways of writing the matching regexes. In the first one, ^@(.*) the first matching group (a.k.a. match.group(1)) is automatically inferred. In the second example, ^@(?P<owner>(.*)), we use a named matching group (called owner, since we are matching an owner) to capture the string we want to provide to the ownership urn.

dbt query_tag automated mappings

This works similarly as the dbt meta mapping but for the query tags

We support the below actions -

- add_tag - Requires

tagproperty in config.

The below example set as global tag the query tag tag key's value.

"query_tag_mapping":

{

"tag":

"match": ".*"

"operation": "add_tag"

"config":

"tag": "{{ $match }}"

}

Integrating with dbt test

To integrate with dbt tests, the dbt source needs access to the run_results.json file generated after a dbt test or dbt build execution. Typically, this is written to the target directory. A common pattern you can follow is:

- Run

dbt build - Copy the

target/run_results.jsonfile to a separate location. This is important, because otherwise subsequentdbtcommands will overwrite the run results. - Run

dbt docs generateto generate themanifest.jsonandcatalog.jsonfiles - The dbt source makes use of the manifest, catalog, and run results file, and hence will need to be moved to a location accessible to the

dbtsource (e.g. s3 or local file system). In the ingestion recipe, therun_results_pathsconfig must be set to the location of therun_results.jsonfile from thedbt buildordbt testrun.

The connector will produce the following things:

- Assertion definitions that are attached to the dataset (or datasets)

- Results from running the tests attached to the timeline of the dataset

The most common reason for missing test results is that the run_results.json with the test result information is getting overwritten by a subsequent dbt command. We recommend copying the run_results.json file before running other dbt commands.

dbt source snapshot-freshness

dbt build

cp target/run_results.json target/run_results_backup.json

dbt docs generate

cp target/run_results_backup.json target/run_results.json

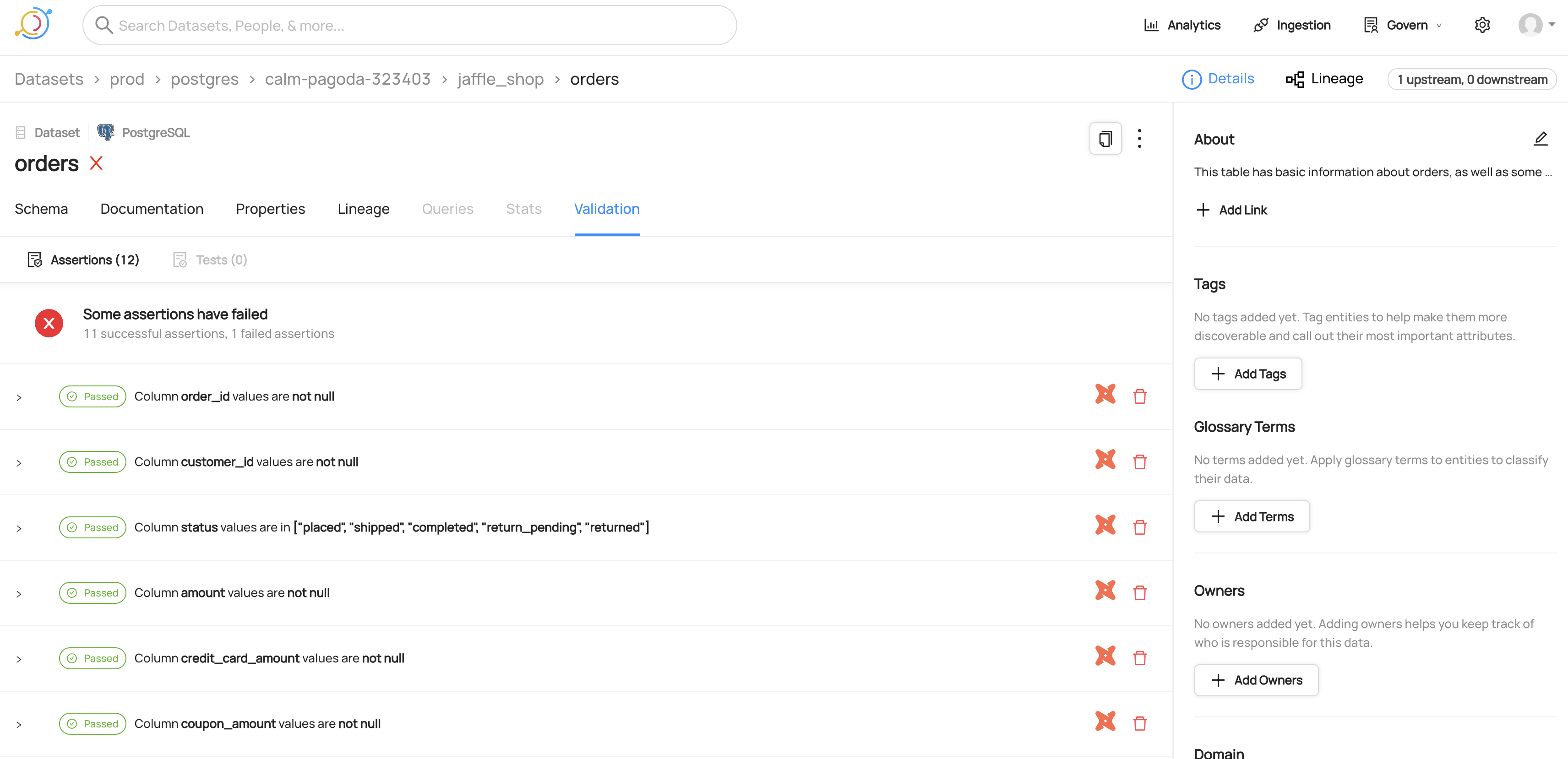

View of dbt tests for a dataset

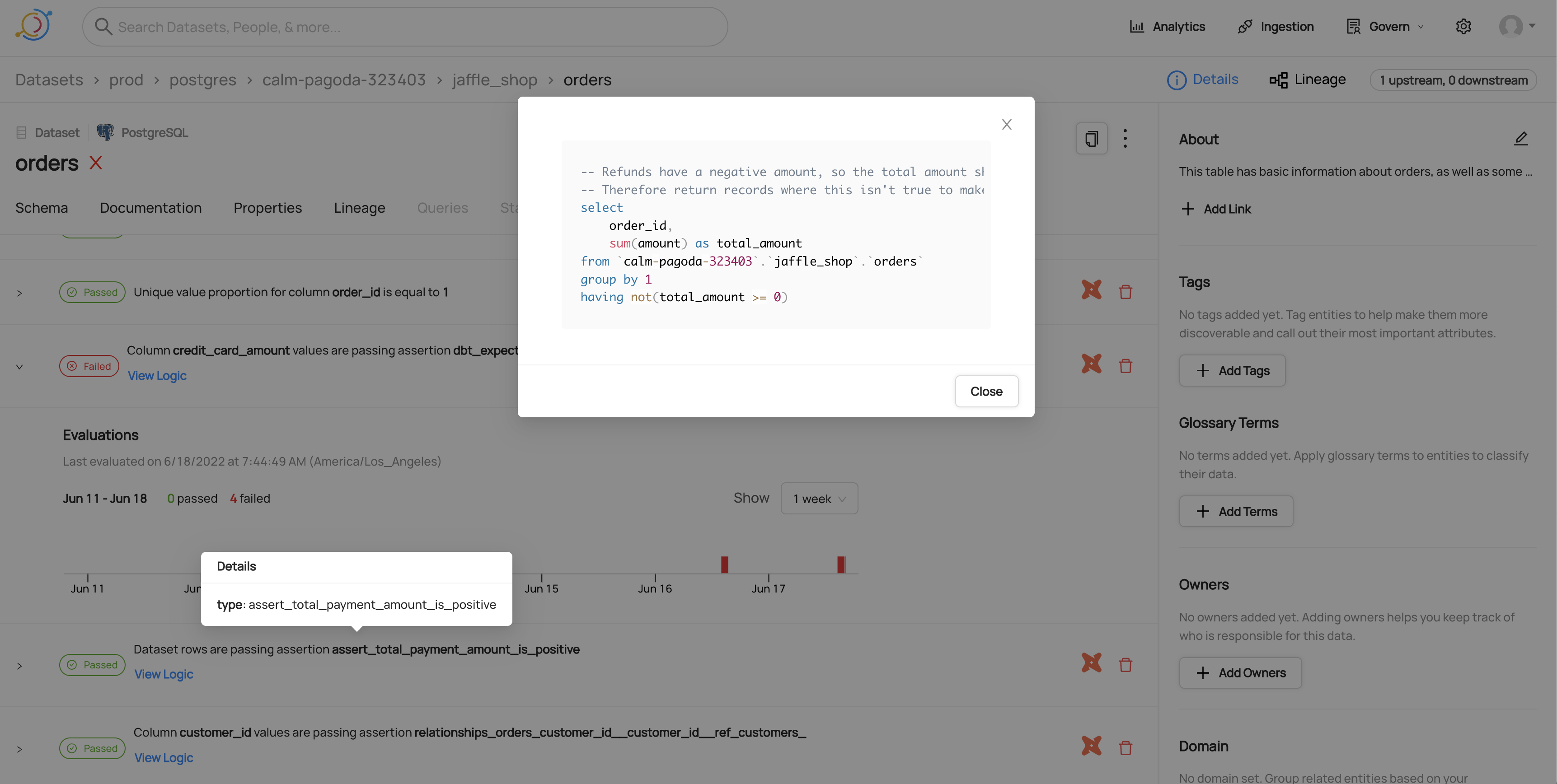

Viewing the SQL for a dbt test

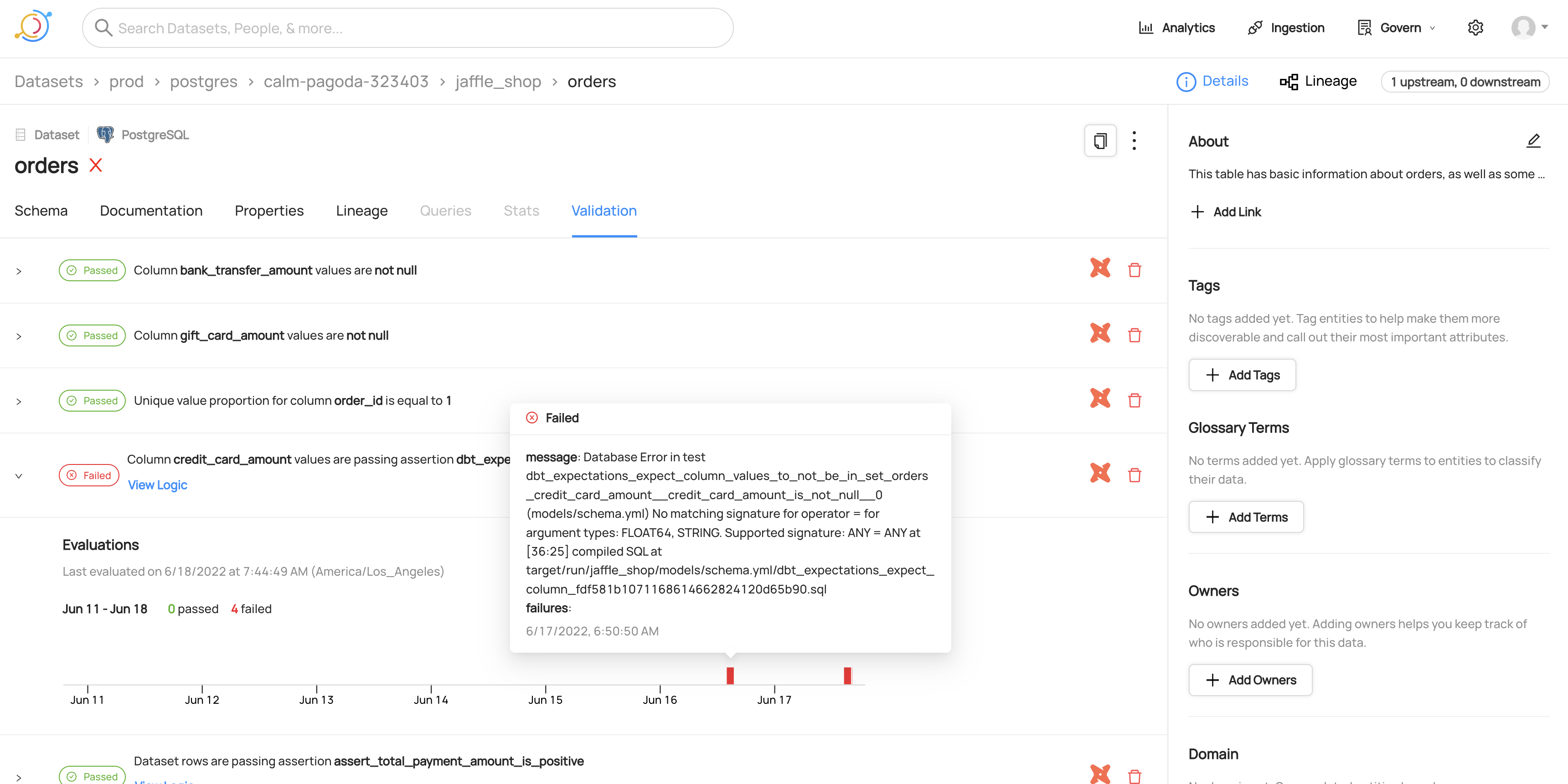

Viewing timeline for a failed dbt test

Separating test result emission from other metadata emission

You can segregate emission of test results from the emission of other dbt metadata using the entities_enabled config flag.

The following recipe shows you how to emit only test results.

source:

type: dbt

config:

manifest_path: _path_to_manifest_json

catalog_path: _path_to_catalog_json

run_results_paths:

- _path_to_run_results_json

target_platform: postgres

entities_enabled:

test_results: Only

Similarly, the following recipe shows you how to emit everything (i.e. models, sources, seeds, test definitions) but not test results:

source:

type: dbt

config:

manifest_path: _path_to_manifest_json

catalog_path: _path_to_catalog_json

run_results_paths:

- _path_to_run_results_json

target_platform: postgres

entities_enabled:

test_results: No

Multiple dbt projects

In more complex dbt setups, you may have multiple dbt projects, where models from one project are used as sources in another project. DataHub supports this setup natively.

Each dbt project should have its own dbt ingestion recipe, and the platform_instance field in the recipe should be set to the dbt project name.

For example, if you have two dbt projects analytics and data_mart, you would have two ingestion recipes.

If you have models in the data_mart project that are used as sources in the analytics project, the lineage will be automatically captured.

# Analytics dbt project

source:

type: dbt

config:

platform_instance: analytics

target_platform: postgres

manifest_path: analytics/target/manifest.json

catalog_path: analytics/target/catalog.json

# ... other configs

# Data Mart dbt project

source:

type: dbt

config:

platform_instance: data_mart

target_platform: postgres

manifest_path: data_mart/target/manifest.json

catalog_path: data_mart/target/catalog.json

# ... other configs

If you have models that have tons of sources from other projects listed in the "Composed Of" section, it may also make sense to hide sources.

Reducing "composed of" sprawl by hiding sources

When many dbt projects use a single table as a source, the "Composed Of" relationships can become very large and difficult to navigate and extra source nodes can clutter the lineage graph.

This is particularly useful for multi-project setups, but can be useful in single-project setups as well.

The benefit is that your entire dbt estate becomes much easier to navigate, and the borders between projects less noticeable. The downside is that we will not pick up any documentation or meta mappings applied to dbt sources.

To enable this, set entities_enabled.sources: No and skip_sources_in_lineage: true in your dbt source config:

source:

type: dbt

config:

platform_instance: analytics

target_platform: postgres

manifest_path: analytics/target/manifest.json

catalog_path: analytics/target/catalog.json

# ... other configs

entities_enabled:

sources: No

skip_sources_in_lineage: true

[Experimental] It's also possible to use skip_sources_in_lineage: true without disabling sources entirely. If you do this, sources will not participate in the lineage graph - they'll have upstreams but no downstreams. However, they will still contribute to docs, tags, etc to the warehouse entity.

Code Coordinates

- Class Name:

datahub.ingestion.source.dbt.dbt_core.DBTCoreSource - Browse on GitHub

Module dbt-cloud

Important Capabilities

| Capability | Status | Notes |

|---|---|---|

| Column-level Lineage | ✅ | Enabled by default, configure using include_column_lineage |

| Detect Deleted Entities | ✅ | Enabled via stateful ingestion |

| Table-Level Lineage | ✅ | Enabled by default |

Setup

This source pulls dbt metadata directly from the dbt Cloud APIs.

Create a service account token with the "Metadata Only" permission. This is a read-only permission.

You'll need to have a dbt Cloud job set up to run your dbt project, and "Generate docs on run" should be enabled.

To get the required IDs, go to the job details page (this is the one with the "Run History" table), and look at the URL. It should look something like this: https://cloud.getdbt.com/next/deploy/107298/projects/175705/jobs/148094. In this example, the account ID is 107298, the project ID is 175705, and the job ID is 148094.

CLI based Ingestion

Install the Plugin

The dbt-cloud source works out of the box with acryl-datahub.

Starter Recipe

Check out the following recipe to get started with ingestion! See below for full configuration options.

For general pointers on writing and running a recipe, see our main recipe guide.

source:

type: "dbt-cloud"

config:

token: ${DBT_CLOUD_TOKEN}

# In the URL https://cloud.getdbt.com/next/deploy/107298/projects/175705/jobs/148094,

# 107298 is the account_id, 175705 is the project_id, and 148094 is the job_id

account_id: "${DBT_ACCOUNT_ID}" # set to your dbt cloud account id

project_id: "${DBT_PROJECT_ID}" # set to your dbt cloud project id

job_id: "${DBT_JOB_ID}" # set to your dbt cloud job id

run_id: # set to your dbt cloud run id. This is optional, and defaults to the latest run

target_platform: postgres

# Options

target_platform: "${TARGET_PLATFORM_ID}" # e.g. bigquery/postgres/etc.

# sink configs

Config Details

- Options

- Schema

Note that a . is used to denote nested fields in the YAML recipe.

| Field | Description |

|---|---|

account_id ✅ integer | The DBT Cloud account ID to use. |

job_id ✅ integer | The ID of the job to ingest metadata from. |

project_id ✅ integer | The dbt Cloud project ID to use. |

target_platform ✅ string | The platform that dbt is loading onto. (e.g. bigquery / redshift / postgres etc.) |

token ✅ string | The API token to use to authenticate with DBT Cloud. |

access_url string | The base URL of the dbt Cloud instance to use. This should be the URL you use to access the dbt Cloud UI. It should include the scheme (http/https) and not include a trailing slash. See the access url for your dbt Cloud region here: https://docs.getdbt.com/docs/cloud/about-cloud/regions-ip-addresses Default: https://cloud.getdbt.com |

column_meta_mapping object | mapping rules that will be executed against dbt column meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

convert_column_urns_to_lowercase boolean | When enabled, converts column URNs to lowercase to ensure cross-platform compatibility. If target_platform is Snowflake, the default is True. Default: False |

enable_meta_mapping boolean | When enabled, applies the mappings that are defined through the meta_mapping directives. Default: True |

enable_owner_extraction boolean | When enabled, ownership info will be extracted from the dbt meta Default: True |

enable_query_tag_mapping boolean | When enabled, applies the mappings that are defined through the query_tag_mapping directives. Default: True |

include_column_lineage boolean | When enabled, column-level lineage will be extracted from the dbt node definition. Requires infer_dbt_schemas to be enabled. If you run into issues where the column name casing does not match up with properly, providing a datahub_api or using the rest sink will improve accuracy. Default: True |

include_compiled_code boolean | When enabled, includes the compiled code in the emitted metadata. Default: True |

include_env_in_assertion_guid boolean | Prior to version 0.9.4.2, the assertion GUIDs did not include the environment. If you're using multiple dbt ingestion that are only distinguished by env, then you should set this flag to True. Default: False |

incremental_lineage boolean | When enabled, emits incremental/patch lineage for non-dbt entities. When disabled, re-states lineage on each run. Default: True |

infer_dbt_schemas boolean | When enabled, schemas will be inferred from the dbt node definition. Default: True |

meta_mapping object | mapping rules that will be executed against dbt meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

metadata_endpoint string | The dbt Cloud metadata API endpoint. If not provided, we will try to infer it from the access_url. |

owner_extraction_pattern string | Regex string to extract owner from the dbt node using the (?P<name>...) syntax of the match object, where the group name must be owner. Examples: (1)r"(?P<owner>(.*)): (\w+) (\w+)" will extract jdoe as the owner from "jdoe: John Doe" (2) r"@(?P<owner>(.*))" will extract alice as the owner from "@alice". |

platform_instance string | The instance of the platform that all assets produced by this recipe belong to. This should be unique within the platform. See https://datahubproject.io/docs/platform-instances/ for more details. |

prefer_sql_parser_lineage boolean | Normally we use dbt's metadata to generate table lineage. When enabled, we prefer results from the SQL parser when generating lineage instead. This can be useful when dbt models reference tables directly, instead of using the ref() macro. This requires that skip_sources_in_lineage is enabled. Default: False |

query_tag_mapping object | mapping rules that will be executed against dbt query_tag meta properties. Refer to the section below on dbt meta automated mappings. Default: {} |

run_id integer | The ID of the run to ingest metadata from. If not specified, we'll default to the latest run. |

skip_sources_in_lineage boolean | [Experimental] When enabled, dbt sources will not be included in the lineage graph. Requires that entities_enabled.sources is set to NO. This is mainly useful when you have multiple, interdependent dbt projects. Default: False |

strip_user_ids_from_email boolean | Whether or not to strip email id while adding owners using dbt meta actions. Default: False |

tag_prefix string | Prefix added to tags during ingestion. Default: dbt: |

target_platform_instance string | The platform instance for the platform that dbt is operating on. Use this if you have multiple instances of the same platform (e.g. redshift) and need to distinguish between them. |

test_warnings_are_errors boolean | When enabled, dbt test warnings will be treated as failures. Default: False |

use_identifiers boolean | Use model identifier instead of model name if defined (if not, default to model name). Default: False |

write_semantics string | Whether the new tags, terms and owners to be added will override the existing ones added only by this source or not. Value for this config can be "PATCH" or "OVERRIDE" Default: PATCH |

env string | Environment to use in namespace when constructing URNs. Default: PROD |

entities_enabled DBTEntitiesEnabled | Controls for enabling / disabling metadata emission for different dbt entities (models, test definitions, test results, etc.) Default: {'models': 'YES', 'sources': 'YES', 'seeds': 'YES'... |

entities_enabled.model_performance Enum | Emit model performance metadata when set to Yes or Only. Only supported with dbt core. Default: YES |

entities_enabled.models Enum | Emit metadata for dbt models when set to Yes or Only Default: YES |

entities_enabled.seeds Enum | Emit metadata for dbt seeds when set to Yes or Only Default: YES |

entities_enabled.snapshots Enum | Emit metadata for dbt snapshots when set to Yes or Only Default: YES |

entities_enabled.sources Enum | Emit metadata for dbt sources when set to Yes or Only Default: YES |

entities_enabled.test_definitions Enum | Emit metadata for test definitions when enabled when set to Yes or Only Default: YES |

entities_enabled.test_results Enum | Emit metadata for test results when set to Yes or Only Default: YES |

node_name_pattern AllowDenyPattern | regex patterns for dbt model names to filter in ingestion. Default: {'allow': ['.*'], 'deny': [], 'ignoreCase': True} |

node_name_pattern.ignoreCase boolean | Whether to ignore case sensitivity during pattern matching. Default: True |

node_name_pattern.allow array | List of regex patterns to include in ingestion Default: ['.*'] |

node_name_pattern.allow.string string | |

node_name_pattern.deny array | List of regex patterns to exclude from ingestion. Default: [] |

node_name_pattern.deny.string string | |

stateful_ingestion StatefulStaleMetadataRemovalConfig | DBT Stateful Ingestion Config. |

stateful_ingestion.enabled boolean | Whether or not to enable stateful ingest. Default: True if a pipeline_name is set and either a datahub-rest sink or datahub_api is specified, otherwise False Default: False |

stateful_ingestion.remove_stale_metadata boolean | Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled. Default: True |

The JSONSchema for this configuration is inlined below.

{

"title": "DBTCloudConfig",

"description": "Base configuration class for stateful ingestion for source configs to inherit from.",

"type": "object",

"properties": {

"incremental_lineage": {

"title": "Incremental Lineage",

"description": "When enabled, emits incremental/patch lineage for non-dbt entities. When disabled, re-states lineage on each run.",

"default": true,

"type": "boolean"

},

"env": {

"title": "Env",

"description": "Environment to use in namespace when constructing URNs.",

"default": "PROD",

"type": "string"

},

"platform_instance": {

"title": "Platform Instance",

"description": "The instance of the platform that all assets produced by this recipe belong to. This should be unique within the platform. See https://datahubproject.io/docs/platform-instances/ for more details.",

"type": "string"

},

"stateful_ingestion": {

"title": "Stateful Ingestion",

"description": "DBT Stateful Ingestion Config.",

"allOf": [

{

"$ref": "#/definitions/StatefulStaleMetadataRemovalConfig"

}

]

},

"target_platform": {

"title": "Target Platform",

"description": "The platform that dbt is loading onto. (e.g. bigquery / redshift / postgres etc.)",

"type": "string"

},

"target_platform_instance": {

"title": "Target Platform Instance",

"description": "The platform instance for the platform that dbt is operating on. Use this if you have multiple instances of the same platform (e.g. redshift) and need to distinguish between them.",

"type": "string"

},

"use_identifiers": {

"title": "Use Identifiers",

"description": "Use model identifier instead of model name if defined (if not, default to model name).",

"default": false,

"type": "boolean"

},

"entities_enabled": {

"title": "Entities Enabled",

"description": "Controls for enabling / disabling metadata emission for different dbt entities (models, test definitions, test results, etc.)",

"default": {

"models": "YES",

"sources": "YES",

"seeds": "YES",

"snapshots": "YES",

"test_definitions": "YES",

"test_results": "YES",

"model_performance": "YES"

},

"allOf": [

{

"$ref": "#/definitions/DBTEntitiesEnabled"

}

]

},

"prefer_sql_parser_lineage": {

"title": "Prefer Sql Parser Lineage",

"description": "Normally we use dbt's metadata to generate table lineage. When enabled, we prefer results from the SQL parser when generating lineage instead. This can be useful when dbt models reference tables directly, instead of using the ref() macro. This requires that `skip_sources_in_lineage` is enabled.",

"default": false,

"type": "boolean"

},

"skip_sources_in_lineage": {

"title": "Skip Sources In Lineage",

"description": "[Experimental] When enabled, dbt sources will not be included in the lineage graph. Requires that `entities_enabled.sources` is set to `NO`. This is mainly useful when you have multiple, interdependent dbt projects. ",

"default": false,

"type": "boolean"

},

"tag_prefix": {

"title": "Tag Prefix",

"description": "Prefix added to tags during ingestion.",

"default": "dbt:",

"type": "string"

},

"node_name_pattern": {

"title": "Node Name Pattern",

"description": "regex patterns for dbt model names to filter in ingestion.",

"default": {

"allow": [

".*"

],

"deny": [],

"ignoreCase": true

},

"allOf": [

{

"$ref": "#/definitions/AllowDenyPattern"

}

]

},

"meta_mapping": {

"title": "Meta Mapping",

"description": "mapping rules that will be executed against dbt meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"column_meta_mapping": {

"title": "Column Meta Mapping",

"description": "mapping rules that will be executed against dbt column meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"enable_meta_mapping": {

"title": "Enable Meta Mapping",

"description": "When enabled, applies the mappings that are defined through the meta_mapping directives.",

"default": true,

"type": "boolean"

},

"query_tag_mapping": {

"title": "Query Tag Mapping",

"description": "mapping rules that will be executed against dbt query_tag meta properties. Refer to the section below on dbt meta automated mappings.",

"default": {},

"type": "object"

},

"enable_query_tag_mapping": {

"title": "Enable Query Tag Mapping",

"description": "When enabled, applies the mappings that are defined through the `query_tag_mapping` directives.",

"default": true,

"type": "boolean"

},

"write_semantics": {

"title": "Write Semantics",

"description": "Whether the new tags, terms and owners to be added will override the existing ones added only by this source or not. Value for this config can be \"PATCH\" or \"OVERRIDE\"",

"default": "PATCH",

"type": "string"

},

"strip_user_ids_from_email": {

"title": "Strip User Ids From Email",

"description": "Whether or not to strip email id while adding owners using dbt meta actions.",

"default": false,

"type": "boolean"

},

"enable_owner_extraction": {

"title": "Enable Owner Extraction",

"description": "When enabled, ownership info will be extracted from the dbt meta",

"default": true,

"type": "boolean"

},

"owner_extraction_pattern": {

"title": "Owner Extraction Pattern",

"description": "Regex string to extract owner from the dbt node using the `(?P<name>...) syntax` of the [match object](https://docs.python.org/3/library/re.html#match-objects), where the group name must be `owner`. Examples: (1)`r\"(?P<owner>(.*)): (\\w+) (\\w+)\"` will extract `jdoe` as the owner from `\"jdoe: John Doe\"` (2) `r\"@(?P<owner>(.*))\"` will extract `alice` as the owner from `\"@alice\"`.",

"type": "string"

},

"include_env_in_assertion_guid": {

"title": "Include Env In Assertion Guid",

"description": "Prior to version 0.9.4.2, the assertion GUIDs did not include the environment. If you're using multiple dbt ingestion that are only distinguished by env, then you should set this flag to True.",

"default": false,

"type": "boolean"

},

"convert_column_urns_to_lowercase": {

"title": "Convert Column Urns To Lowercase",

"description": "When enabled, converts column URNs to lowercase to ensure cross-platform compatibility. If `target_platform` is Snowflake, the default is True.",

"default": false,

"type": "boolean"

},

"test_warnings_are_errors": {

"title": "Test Warnings Are Errors",

"description": "When enabled, dbt test warnings will be treated as failures.",

"default": false,

"type": "boolean"

},

"infer_dbt_schemas": {

"title": "Infer Dbt Schemas",

"description": "When enabled, schemas will be inferred from the dbt node definition.",

"default": true,

"type": "boolean"

},

"include_column_lineage": {

"title": "Include Column Lineage",

"description": "When enabled, column-level lineage will be extracted from the dbt node definition. Requires `infer_dbt_schemas` to be enabled. If you run into issues where the column name casing does not match up with properly, providing a datahub_api or using the rest sink will improve accuracy.",

"default": true,

"type": "boolean"

},

"include_compiled_code": {

"title": "Include Compiled Code",

"description": "When enabled, includes the compiled code in the emitted metadata.",

"default": true,

"type": "boolean"

},

"access_url": {

"title": "Access Url",

"description": "The base URL of the dbt Cloud instance to use. This should be the URL you use to access the dbt Cloud UI. It should include the scheme (http/https) and not include a trailing slash. See the access url for your dbt Cloud region here: https://docs.getdbt.com/docs/cloud/about-cloud/regions-ip-addresses",

"default": "https://cloud.getdbt.com",

"type": "string"

},

"metadata_endpoint": {

"title": "Metadata Endpoint",

"description": "The dbt Cloud metadata API endpoint. If not provided, we will try to infer it from the access_url.",

"default": "https://metadata.cloud.getdbt.com/graphql",

"type": "string"

},

"token": {

"title": "Token",

"description": "The API token to use to authenticate with DBT Cloud.",

"type": "string"

},

"account_id": {

"title": "Account Id",

"description": "The DBT Cloud account ID to use.",

"type": "integer"

},

"project_id": {

"title": "Project Id",

"description": "The dbt Cloud project ID to use.",

"type": "integer"

},

"job_id": {

"title": "Job Id",

"description": "The ID of the job to ingest metadata from.",

"type": "integer"

},

"run_id": {

"title": "Run Id",

"description": "The ID of the run to ingest metadata from. If not specified, we'll default to the latest run.",

"type": "integer"

}

},

"required": [

"target_platform",

"token",

"account_id",

"project_id",

"job_id"

],

"additionalProperties": false,

"definitions": {

"DynamicTypedStateProviderConfig": {

"title": "DynamicTypedStateProviderConfig",

"type": "object",

"properties": {

"type": {

"title": "Type",

"description": "The type of the state provider to use. For DataHub use `datahub`",

"type": "string"

},

"config": {

"title": "Config",

"description": "The configuration required for initializing the state provider. Default: The datahub_api config if set at pipeline level. Otherwise, the default DatahubClientConfig. See the defaults (https://github.com/datahub-project/datahub/blob/master/metadata-ingestion/src/datahub/ingestion/graph/client.py#L19).",

"default": {},

"type": "object"

}

},

"required": [

"type"

],

"additionalProperties": false

},

"StatefulStaleMetadataRemovalConfig": {

"title": "StatefulStaleMetadataRemovalConfig",

"description": "Base specialized config for Stateful Ingestion with stale metadata removal capability.",

"type": "object",

"properties": {

"enabled": {

"title": "Enabled",

"description": "Whether or not to enable stateful ingest. Default: True if a pipeline_name is set and either a datahub-rest sink or `datahub_api` is specified, otherwise False",

"default": false,

"type": "boolean"

},

"remove_stale_metadata": {

"title": "Remove Stale Metadata",

"description": "Soft-deletes the entities present in the last successful run but missing in the current run with stateful_ingestion enabled.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

},

"EmitDirective": {

"title": "EmitDirective",

"description": "A holder for directives for emission for specific types of entities",

"enum": [

"YES",

"NO",

"ONLY"

]

},

"DBTEntitiesEnabled": {

"title": "DBTEntitiesEnabled",

"description": "Controls which dbt entities are going to be emitted by this source",

"type": "object",

"properties": {

"models": {

"description": "Emit metadata for dbt models when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"sources": {

"description": "Emit metadata for dbt sources when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"seeds": {

"description": "Emit metadata for dbt seeds when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"snapshots": {

"description": "Emit metadata for dbt snapshots when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"test_definitions": {

"description": "Emit metadata for test definitions when enabled when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"test_results": {

"description": "Emit metadata for test results when set to Yes or Only",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

},

"model_performance": {

"description": "Emit model performance metadata when set to Yes or Only. Only supported with dbt core.",

"default": "YES",

"allOf": [

{

"$ref": "#/definitions/EmitDirective"

}

]

}

},

"additionalProperties": false

},

"AllowDenyPattern": {

"title": "AllowDenyPattern",

"description": "A class to store allow deny regexes",

"type": "object",

"properties": {

"allow": {

"title": "Allow",

"description": "List of regex patterns to include in ingestion",

"default": [

".*"

],

"type": "array",

"items": {

"type": "string"

}

},

"deny": {

"title": "Deny",

"description": "List of regex patterns to exclude from ingestion.",

"default": [],

"type": "array",

"items": {

"type": "string"

}

},

"ignoreCase": {

"title": "Ignorecase",

"description": "Whether to ignore case sensitivity during pattern matching.",

"default": true,

"type": "boolean"

}

},

"additionalProperties": false

}

}

}

Code Coordinates

- Class Name:

datahub.ingestion.source.dbt.dbt_cloud.DBTCloudSource - Browse on GitHub

Questions

If you've got any questions on configuring ingestion for dbt, feel free to ping us on our Slack.